Facial recognition uses a biometric trait, the face, to identify or authenticate a person.

Static Facial Recognition and Dynamic Facial Recognition

Static facial recognition takes a picture of a person as the input to the facial recognition system. One example is the Facebook application, where a user tags faces in photos. Another is a police application, where an officer inputs a photo and searches a database to find out who the person is. In both cases, the input is a photo. The process of static facial recognition involves detecting the face, aligning the face to a nominal position, extracting a feature vector (we will cover this later), and then comparing that feature vector to those in the database to determine who the person is.

In dynamic facial recognition, we identify people from cameras pointed at a group of people walking, or from video files of such a group. The process consists of face detection, face tracking, and selecting the best available face from each tracker, followed by aligning each face to a nominal position, extracting a feature vector for each face, and comparing the distance between that feature vector and those in the database.
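The "select the best available face from each tracker" step can be sketched as follows. This is a minimal illustration, not the method of any particular product: it assumes that the detector and tracker have already produced `(track_id, frame_index, quality)` tuples, and the `quality` score (for example sharpness or frontalness) is a hypothetical input.

```python
def best_face_per_track(detections):
    """Pick the highest-quality detection for each tracked person.

    detections: iterable of (track_id, frame_index, quality) tuples.
    The quality score is a hypothetical input; how it is computed
    is outside the scope of this sketch.
    Returns a dict mapping track_id -> (frame_index, quality).
    """
    best = {}
    for track_id, frame_index, quality in detections:
        # Keep the first detection seen for a track, then replace it
        # only when a strictly better one arrives.
        if track_id not in best or quality > best[track_id][1]:
            best[track_id] = (frame_index, quality)
    return best


if __name__ == "__main__":
    sample = [(1, 0, 0.4), (1, 1, 0.9), (2, 0, 0.7), (1, 2, 0.5)]
    print(best_face_per_track(sample))
```

Only the selected face from each track then needs to go through alignment and feature extraction, which keeps the expensive steps to one run per person rather than one per frame.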

1:1, 1:N, Small N, Large N

The authentication problem is a 1:1 problem. It tries to verify that you are who you claim to be by checking the distance between the feature vector of the input face and that of the enrolled face in the database.
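A minimal sketch of the 1:1 check, assuming that feature vectors are plain lists of floats, that Euclidean distance is the metric, and that `0.6` is the acceptance threshold. All three are illustrative assumptions; a real system uses the metric and threshold tuned for its own feature extraction model.

```python
import math


def verify(probe, enrolled, threshold=0.6):
    """Return True if the probe face matches the enrolled face.

    probe, enrolled: feature vectors of equal length.
    threshold: hypothetical maximum distance accepted as a match.
    """
    if len(probe) != len(enrolled):
        raise ValueError("feature vectors must have the same length")
    # math.dist computes the Euclidean distance between two points.
    return math.dist(probe, enrolled) <= threshold


if __name__ == "__main__":
    print(verify([0.0, 1.0], [0.1, 0.9]))  # close vectors
    print(verify([0.0, 1.0], [1.0, 0.0]))  # distant vectors
```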

The identification problem is a 1:N problem. It searches a database of N faces to find who the input face belongs to, or reports the face as unknown when even the closest match does not meet the threshold. The larger N is, the more difficult the problem becomes. When N is small, like 50, we can use a relatively simple feature extraction model (we will discuss this later) to extract the feature vector. When N is on the order of 10000, we need a relatively complex feature extraction model. When N is on the order of millions, or even billions, we need a very complex feature extraction model with very high discriminating power. On today's hardware, when N is smaller than 20000, we can implement the face feature vector extraction on the edge device. This has a fancy name: edge computing. The LinkSprite 7″ access control tablet does the facial recognition locally and can recognize 20000 subjects. When N is larger than 50000, we need to do feature extraction on a PC or in the cloud. Note that this is not just because of the memory needed to hold the database; the main constraint is the computation required to run the feature extraction model.
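The 1:N search with an "unknown" outcome can be sketched as a nearest-neighbor lookup. This is a minimal illustration, assuming the database is a dictionary from names to feature vectors, Euclidean distance is the metric, and `0.6` is a hypothetical threshold. A linear scan like this is only reasonable for small N; large databases normally need an index.

```python
import math


def identify(probe, database, threshold=0.6):
    """Return (name, distance) of the closest enrolled face.

    database: dict mapping a name to its feature vector.
    threshold: hypothetical maximum distance accepted as a match.
    Returns (None, distance) when the closest match is still too far,
    i.e. the input face is treated as unknown.
    """
    best_name, best_dist = None, math.inf
    for name, vector in database.items():
        d = math.dist(probe, vector)
        if d < best_dist:
            best_name, best_dist = name, d
    if best_dist > threshold:
        return None, best_dist
    return best_name, best_dist


if __name__ == "__main__":
    db = {"alice": [0.0, 1.0], "bob": [1.0, 0.0]}
    print(identify([0.1, 0.9], db))
    print(identify([5.0, 5.0], db))
```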

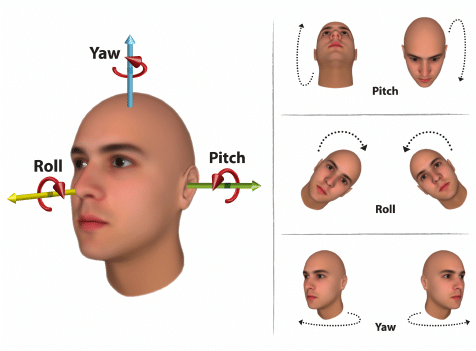

Large Pose

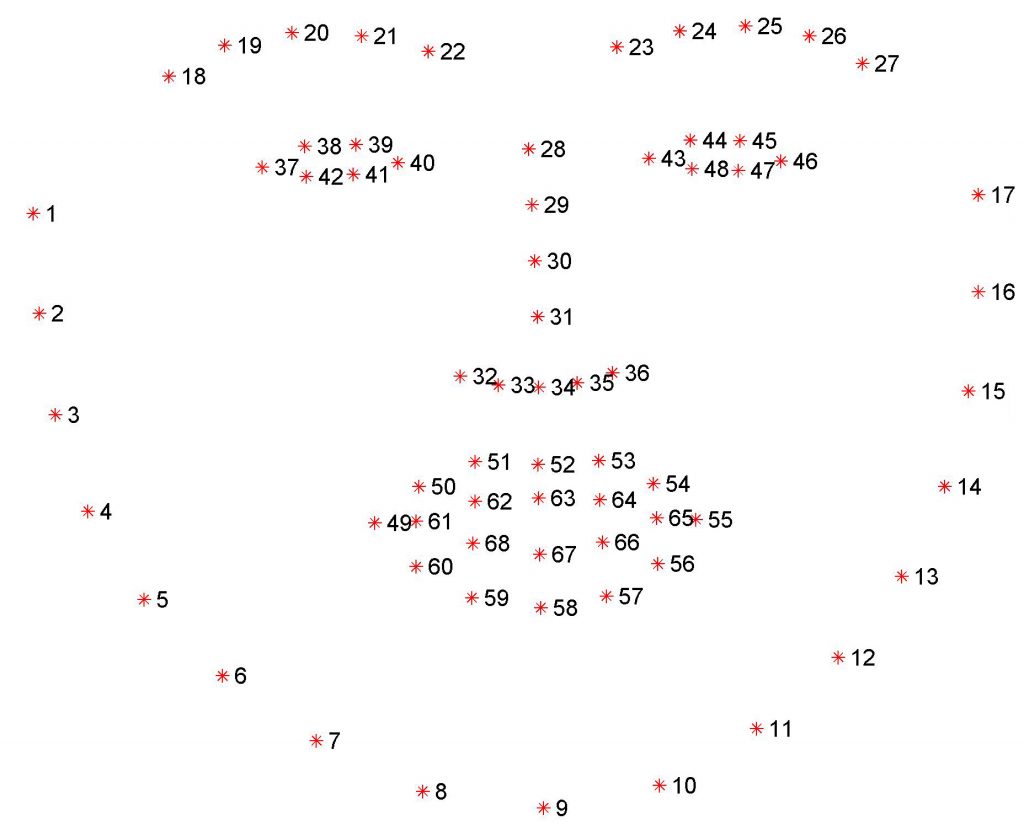

Ideally, we would like to do facial recognition using the frontal view of the face. The appearance of a human face changes dramatically with the viewing angle. This makes facial recognition very challenging, as even humans sometimes find it difficult to recognize the same person from a different view. The terms used to describe face pose are illustrated below.

We can see that roll is just an in-plane rotation. It can be easily rectified by detecting the facial landmarks and rotating the face.
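As a rough sketch of roll correction, the roll angle can be estimated from the line joining the two eye landmarks, and the face can then be rotated by the opposite angle. The example assumes that the eye centers are already available as `(x, y)` pixel coordinates from some landmark detector, and it rotates individual points rather than a full image to stay dependency-free. The function names are illustrative.

```python
import math


def roll_angle_degrees(left_eye, right_eye):
    """Angle of the eye line relative to horizontal, in degrees.

    left_eye, right_eye: (x, y) of the eye on the left and right side
    of the image. Zero means the eyes are level.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_degrees):
    """Rotate a point about a center using the standard 2D rotation."""
    theta = math.radians(angle_degrees)
    x, y = point[0] - center[0], point[1] - center[1]
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    return (center[0] + x * cos_t - y * sin_t,
            center[1] + x * sin_t + y * cos_t)


if __name__ == "__main__":
    left, right = (100.0, 120.0), (160.0, 150.0)
    angle = roll_angle_degrees(left, right)
    # Rotating by the negative angle about the left eye levels the eyes.
    print(angle, rotate_point(right, left, -angle))
```

Applying the same rotation to every pixel (or every landmark) brings the eyes onto a horizontal line, which is the in-plane part of aligning the face to its nominal position.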

For other large poses, 3D face modeling and 3D landmarks can be used to reconstruct the frontal face.

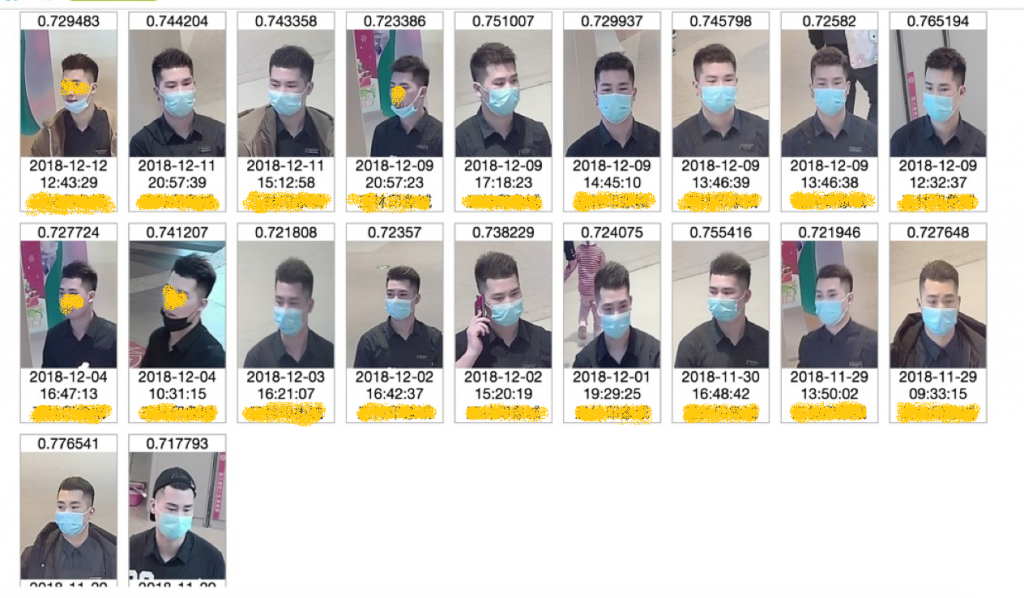

Obstructed Facial Recognition

In many cases, a person will be wearing sunglasses or a mask. Facial recognition on these faces requires extra steps to remove the obstruction and reconstruct the face. LinkSprite facial recognition takes great care with these cases. The following shows that a person is clustered as the same person with and without a mask, and even at large poses.

Anti-spoof or Liveness Test

For facial recognition applications that grant access to the subject, we need an anti-spoof or liveness test to prevent access using a photo or video replay. There is a wide range of anti-spoof measures. An old-fashioned anti-spoof test requires the user to blink, smile, or shake their head. This is time consuming and not user friendly. A newer class is called static anti-spoof, i.e. the user does not need to move their head, etc. Among the static anti-spoof measures, the most expensive adds a structured-light depth camera to sense the 3D depth of the face in front of the camera and tell whether it is a real person or a spoof. Next comes the infrared camera, which uses an extra spectral band to check whether the face is real. Most recently, the industry has been actively researching anti-spoof that uses only the same RGB camera that performs the facial recognition. LinkSprite has a world-class RGB-based liveness test, which can be obtained from the LinkSprite open AI platform.